Update: A Message for the Future

May 4, 2018

There are signs. But what good are signs without letters? Think you can just type out your message with your computer keyboard? What keyboard? You're in the wilderness, friend. Letters don't just grow on trees.

When I was a kid, my father had one of those black plastic sign boards with removable white letters in the lobby of his business. When he wasn't looking, I re-arranged the letters to make the sign say something much more interesting.

Actually, this happened when I was an adult, not when I was a kid....

But how long do you really think your precious little message is going to last, knowing the kids these days? My father was not thrilled, and you will not be thrilled either.

So you gotta lock that sign. My father never thought of that. Apparently, in 35 years of running his business, he never fathomed that a bad seed like me would come waltzing through his lobby.

But there are no bad seeds in this game, so you probably don't have to worry about it. Try putting a second sign next to your first sign that says, "Please do not change the sign." Or was that "Inspect Neonatal Hedgehogs"?

Yeah, so, lock your sign, daddy-o.

And hey, since signs are containers, that means we can lock some other containers now too.

| Update: Fixing a bunch of things

April 28, 2018

This week's update involves a lot of maintenance and tweaking.

Decay times have been increased dramatically along with tool usage counts, but the tool usage engine has also been overhauled to fix some bugs and inconsistencies, and also to switch to a semi-random breakage model.

For example, an ax can be used 100 times on average, and goes through four use states, with an average of 25 uses per state (1/25 chance of advancing to the next state). Worst case, which would occur once in about 400,000 axes, would be an ax that can only be used 4 times. Contrast this with a purely random usage model where the ax has a 1/100 chance of breaking. We'd still expect 100 uses on average, but we'd also expect an ax that breaks on the first use to happen once in 100 axes, which would be a frustratingly high rate.
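To make the arithmetic concrete, here is a small Python simulation of the two breakage models. It assumes what the paragraph states: four use states with a 1/25 chance per use of advancing, versus a flat 1/100 chance of breaking per use. The function names are illustrative, not the game's actual code.

```python
import random

USE_STATES = 4            # wear states an ax passes through (from the post)
ADVANCE_CHANCE = 1 / 25   # per-use chance of moving to the next state (from the post)


def uses_until_broken(rng: random.Random) -> int:
    """Semi-random model: count uses until the tool advances past its last state."""
    uses = 0
    for _ in range(USE_STATES):
        # Keep using the tool in this state until one use advances it.
        while True:
            uses += 1
            if rng.random() < ADVANCE_CHANCE:
                break
    return uses


def uses_until_broken_pure(rng: random.Random, break_chance: float = 1 / 100) -> int:
    """Purely random model: every use has the same independent chance of breaking."""
    uses = 1
    while rng.random() >= break_chance:
        uses += 1
    return uses


# Staged worst case: each of the first 4 uses advances a state, (1/25)^4 = 1/390,625.
p_worst_staged = ADVANCE_CHANCE ** USE_STATES
# Pure-model worst case: the very first use breaks the tool.
p_worst_pure = 1 / 100

if __name__ == "__main__":
    rng = random.Random(2018)
    trials = 10_000
    staged = [uses_until_broken(rng) for _ in range(trials)]
    pure = [uses_until_broken_pure(rng) for _ in range(trials)]
    print(f"staged mean uses: {sum(staged) / trials:.1f}")
    print(f"pure mean uses:   {sum(pure) / trials:.1f}")
    print(f"1 in {1 / p_worst_staged:,.0f} staged axes breaks after only 4 uses")
    print(f"1 in {1 / p_worst_pure:,.0f} pure axes breaks on the first use")
```

Both models average about 100 uses; the difference is entirely in the tail. The staged model needs four unlucky advances in a row to fail early, while the flat model fails on the first use once per 100 axes.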

Various natural resources have been tweaked, and a new way to deep mine exhaustible iron ore has been added.

Also, for those of you who might have missed it, a new Eve placement algorithm is live, which you can read about here:

http://onehouronelife.com/newsPage.php?postID=1310

The plan for next week is solid content creation. I'm hoping to take a break from programming and tweaking. And signs are coming.

| New Eve placement algorithm

April 24, 2018

The way Eves are placed has a substantial impact on the feeling of the game. Whenever a player joins the server when there is no suitable mother for that player, that player spawns as a full-grown Eve instead of as a baby. Eve serves as the potential root of a new family tree, and her placement determines the opportunities that are available to that family.

Originally, Eves were placed at random inside an arbitrary radius around the world location (0,0).

This worked fine for a while, until that area began to fill up with civilization. Eve is supposed to feel like a fresh start, with maybe a small chance of stumbling into the ruins of a past civilization, or eventually bumping up against a living, neighboring civilization. As the center of the map got full, Eve was always just a stone's throw away from a village. Furthermore, with everyone so close together, there was no danger of losing a village if it died out. Thus, keeping a village alive meant nothing. We could always find our way back to revive the ghost town tomorrow. Even worse, as these areas got ravaged by human activities, the resources that a new Eve needs to bootstrap became more and more scarce. Eve does need a somewhat green pasture to found a new civilization.

The next Eve placement algorithm involved a random walk across the map, looking at the last Eve location and making a random jump 2000 tiles away to place the next Eve. There can be some randomly occurring back-tracking with this method, which means that Eve can sometimes end up near the ruins of old civilizations, but we expect such a random walk to eventually explore the entire map, so we will also get farther and farther from the center over time. And with many Eves dying without founding new civilizations, and also perhaps due to biases in the random coordinate generator, we quickly walked our way from (0,0) out into the 50,000's. This meant that villages were generally too far apart to interact with each other. Still, Eve was usually in an untouched area full of natural resources.
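A minimal sketch of that random walk, assuming the jump direction is drawn uniformly at random (the post only gives the 2000-tile jump length; `next_eve_random_walk` is a hypothetical name):

```python
import math
import random

JUMP_DISTANCE = 2000  # tiles between consecutive Eves (from the post)


def next_eve_random_walk(last_eve: tuple[int, int], rng: random.Random) -> tuple[int, int]:
    """Place the next Eve one fixed-length jump from the last Eve.

    Assumption: the direction is uniform; the real server may choose offsets differently.
    """
    angle = rng.uniform(0.0, 2.0 * math.pi)
    x = last_eve[0] + round(JUMP_DISTANCE * math.cos(angle))
    y = last_eve[1] + round(JUMP_DISTANCE * math.sin(angle))
    return (x, y)


if __name__ == "__main__":
    rng = random.Random(7)
    eve = (0, 0)
    for _ in range(100):
        eve = next_eve_random_walk(eve, rng)
    print("after 100 Eves:", eve, "distance from origin:", round(math.hypot(*eve)))
```

For independent, uniformly directed steps of length L, the root-mean-square distance from the start after n steps is L * sqrt(n), so even without any bias the walk keeps drifting outward as Eves accumulate.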

The next Eve placement algorithm was radial, placing Eves randomly at a radius of 1000 from a fixed center point. This put all villages within trading distance of each other, and offered plenty of untouched space for Eve---for a while. But soon, the "rim" of the wheel filled up with civilization, and we were back to where we started---Eves placed in a crowded area that was stripped bare of natural resources.
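The radial scheme is even simpler. This sketch picks a uniformly random angle on a circle of radius 1000 around a fixed center; the uniform angle is an assumption, and `place_eve_radial` is a hypothetical name.

```python
import math
import random

RIM_RADIUS = 1000  # distance from the fixed center (from the post)


def place_eve_radial(center: tuple[int, int], rng: random.Random) -> tuple[int, int]:
    """Place an Eve at a random angle on a circle of fixed radius around center."""
    angle = rng.uniform(0.0, 2.0 * math.pi)
    return (center[0] + round(RIM_RADIUS * math.cos(angle)),
            center[1] + round(RIM_RADIUS * math.sin(angle)))


if __name__ == "__main__":
    rng = random.Random(42)
    print([place_eve_radial((0, 0), rng) for _ in range(5)])
```

Any two points on such a circle are at most 2000 tiles apart, which is what kept villages within trading distance. The catch is that the whole rim is only about 2π × 1000 ≈ 6,283 tiles long, so it fills up.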

The latest Eve placement algorithm was suggested long ago by Joriom and maybe a few other people, and involves an ever-growing spiral around a fixed center point. This guarantees that Eve is always in an untouched area, but also that she is never too far from some recent civilizations, so trade can happen.

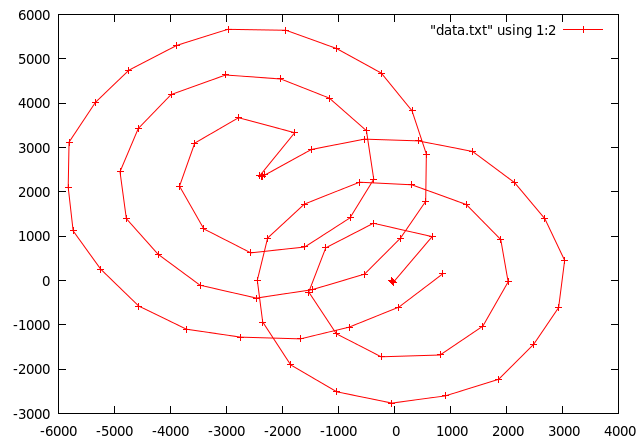

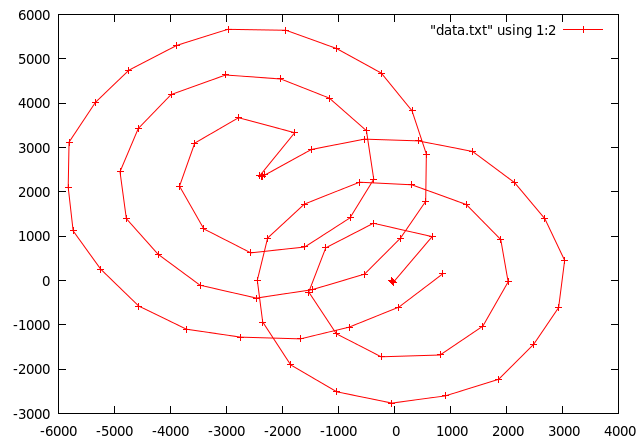

While a server is running, the placements look like this:

You can see the three initial Eve placements at the center, which the server permits at startup to ensure that the first few Eves can have a chance to bootstrap a village in that spot. After that, the spiral ensues, and would keep going as long as the server was running.

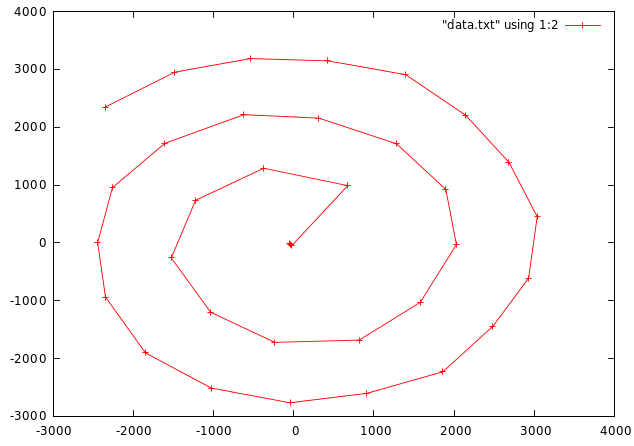
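One way to get an ever-growing spiral is an Archimedean spiral, whose radius grows by a fixed amount every turn. The sketch below assumes that shape, a 500-tile gap between arms, and roughly 300 tiles between consecutive Eves. The post does not give the actual spiral shape or spacing, so `SpiralPlacer` and its parameters are hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class SpiralPlacer:
    """Hypothetical Archimedean-spiral Eve placer (parameters are assumptions)."""
    center: tuple[int, int]
    turn_gap: float = 500.0        # assumed distance between successive arms
    step: float = 300.0            # assumed distance between consecutive Eves
    theta: float = 2.0 * math.pi   # start one full turn out so the radius is nonzero

    def radius(self) -> float:
        # Archimedean spiral: the radius grows by turn_gap every full turn (2*pi radians).
        return self.turn_gap * self.theta / (2.0 * math.pi)

    def next_eve(self) -> tuple[int, int]:
        r = self.radius()
        pos = (self.center[0] + round(r * math.cos(self.theta)),
               self.center[1] + round(r * math.sin(self.theta)))
        # Advance the angle so the next Eve lands roughly `step` tiles further along;
        # for large r, a small angle change d_theta covers about r * d_theta tiles.
        self.theta += self.step / r
        return pos


if __name__ == "__main__":
    placer = SpiralPlacer(center=(0, 0))
    for _ in range(10):
        print(placer.next_eve())
```

Because the radius only grows, each new Eve lands at a larger radius than every earlier placement on the same spiral, while staying roughly one arm gap away from the villages founded on the previous turn.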

What happens when a server shuts down though, as it does every week during updates?

First, the death location of the longest-lineage person during shutdown is remembered. This is used as the "center" of the spiral at the next startup, and after startup, the first three Eves are placed near there. After that, a new spiral grows around that new center point, like this:

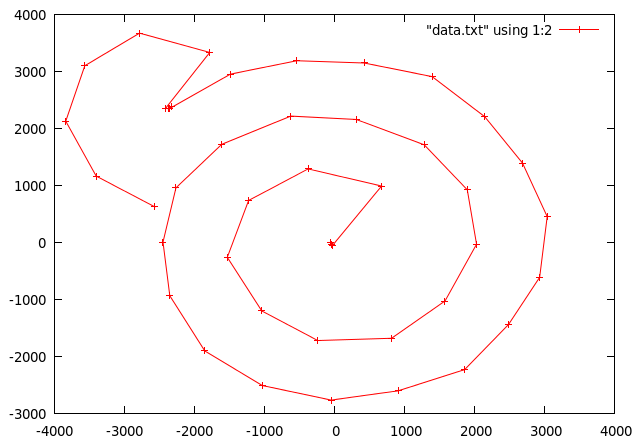
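The restart rule can be sketched the same way. Assuming the server keeps a hypothetical `DeathRecord` (lineage length and death location) for people who die at shutdown, the next center is the location of the record with the longest lineage, and the first three Eves after startup are scattered near it before the spiral takes over. The 20-tile scatter radius and the (0, 0) fallback are assumptions.

```python
import random
from dataclasses import dataclass
from typing import Optional

STARTUP_EVES = 3  # Eves placed near the center right after startup (from the post)


@dataclass
class DeathRecord:
    """Hypothetical record of a death logged during shutdown."""
    lineage_length: int        # generations in this person's lineage
    location: tuple[int, int]  # where the person died


def restart_center(deaths: list[DeathRecord],
                   fallback: tuple[int, int] = (0, 0)) -> tuple[int, int]:
    """Next spiral center: where the longest-lineage person died at shutdown."""
    if not deaths:
        return fallback
    return max(deaths, key=lambda d: d.lineage_length).location


def startup_eve(center: tuple[int, int], eves_placed_so_far: int,
                rng: random.Random, jitter: int = 20) -> Optional[tuple[int, int]]:
    """Spot near center for the first few Eves, or None once the spiral should take over.

    `jitter` is an assumed scatter radius in tiles; the post only says "near there".
    """
    if eves_placed_so_far >= STARTUP_EVES:
        return None
    return (center[0] + rng.randint(-jitter, jitter),
            center[1] + rng.randint(-jitter, jitter))


if __name__ == "__main__":
    deaths = [DeathRecord(12, (340, -90)), DeathRecord(57, (-1200, 800))]
    center = restart_center(deaths)
    rng = random.Random(1)
    print(center, [startup_eve(center, n, rng) for n in range(4)])
```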

As this new spiral grows, it will cross through the previous spiral like so:

That is okay, because any of the other civilizations that were active at shutdown may be rediscovered by Eves, which makes for an interesting Eve variation. Still, future Eves will not be trapped in these already-developed areas, as the spiral will continue further out into untouched areas again.

Also, some of the older, long forgotten villages in the old spiral will be wiped when the server restarts due to the 24-hour abandonment cut-off. Thus, the new spiral will cross through many now-wild areas, even as it overlaps the old spiral.

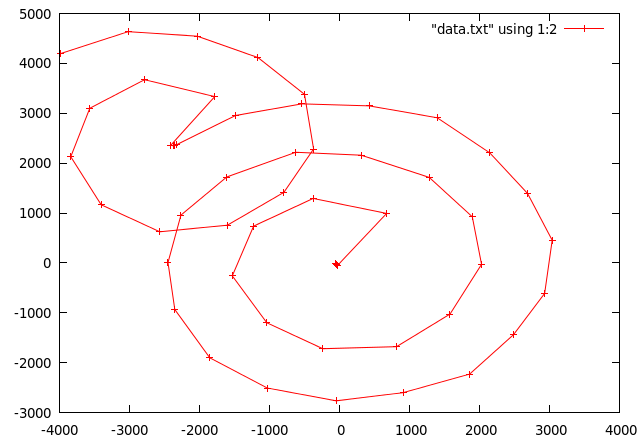
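A rough sketch of the 24-hour abandonment cut-off, assuming the server tracks a hypothetical last-activity timestamp per map area (the post does not say how abandonment is measured): areas untouched for more than 24 hours at restart are wiped, and everything else survives.

```python
from datetime import datetime, timedelta

ABANDONMENT_CUTOFF = timedelta(hours=24)  # cut-off from the post

Area = tuple[int, int]


def split_areas_at_restart(last_activity: dict[Area, datetime],
                           now: datetime) -> tuple[set[Area], set[Area]]:
    """Return (kept, wiped) area coordinates.

    Hypothetical model: an area is wiped if nobody touched it within the cut-off.
    """
    kept = {pos for pos, t in last_activity.items() if now - t <= ABANDONMENT_CUTOFF}
    wiped = set(last_activity) - kept
    return kept, wiped


if __name__ == "__main__":
    now = datetime(2018, 4, 24, 12, 0)
    areas = {
        (0, 0): now - timedelta(hours=2),        # village active at shutdown
        (1500, -800): now - timedelta(days=3),   # long-forgotten village
    }
    kept, wiped = split_areas_at_restart(areas, now)
    print("kept:", kept, "wiped:", wiped)
```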

As the new spiral grows bigger, it will eventually engulf the old spiral and move beyond it, but the overlapping area will always be substantially less than half of the new spiral. Thus, even if 100% of the old spiral contained active villages that were not wiped, more than half of the Eves in the new spiral will be placed in untouched wilderness.

| Update: From Riches to Rags

April 21, 2018

75 new things. Mostly broken things. Rotting things. Fragile things.

And stone walls, which are the opposite of all of that. And locking doors.

A whole new web of interdependence for farming.

A new mother selection method (temperature, not food).

| Update: The Monument

April 14, 2018

If you build it...

We crossed one million lives lived inside the game this week. It's kind of mind-boggling. And a group of well-coordinated players also trounced the previous lineage record of 32 generations, making it all the way up to 111 generations with the Lee family. I've posted the 111 names from the matriarch chain here.

I've tied all these trends together in this update, which includes a monument, along with quite a few other changes.

In light of the recent server performance updates, the per-server player cap has been raised.

The experience of being murdered has been dramatically improved. (Yes, that is a peculiar sentence.) It has never actually happened to me in game yet, and I hope it will never happen to you. But if it does, you'll have a small bit of time to get your affairs in order...

Long term food sustainability has been made much harder. Getting up to 30+ generations in one spot should be a pretty substantial challenge, and you won't be able to do it on carrots alone. The top has been hardened.

Short term food availability has been made a bit easier, with the addition of two more edible wild plants. But they don't grow back, and they can't be domesticated, so they don't affect the long term challenge. Being an Eve in the wilderness should be a little bit less stressful. The bottom has been softened.

And there may be one little tiny surprise in there too... the idea came to me while I was falling asleep last night... I just had to put it in.

Also, monument logging is in place server-side, but the processing and display of those logs is just a counter for now. A better monument roster will be implemented next week, assuming that it's needed. And yes, that is supposed to be a challenge.
